
Motor-planning and coordination in single and joint action

In our daily routine, we are continually performing actions that require coordination between different parts of our body and coordination with our environment. Most of these actions are performed in crowded environments, and obstacles and attentional factors must be taken into account.

We also coordinate our movements in social contexts, i.e., when working with a partner. This coordination is based on predicting the partner’s next action, and on being synchronized in action execution. In football, for instance, players have to predict the moves of their teammates and pass the ball to the player whose predicted position would be optimal to score.

This research area comprises the following fields:

- Action planning in various task contexts

- Motor coordination in joint action

- Shared task representation in joint action

Action planning in various task contexts

Principal investigators: Danilo Kuhn, Jan Tünnermann, Anna Schubö

There are often several possible ways to perform reaching or grasping movements toward specific target objects. Humans usually perform such movements smoothly, quickly, and accurately: their action planning seems spontaneous and effortless.

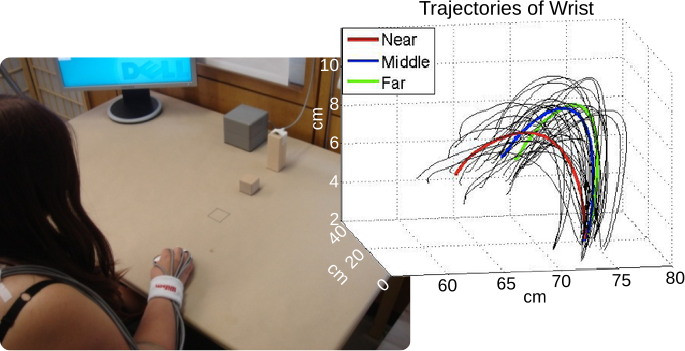

This project explores the principles humans apply when selecting movement parameters in a given task. In dynamic pick-and-place tasks similar to visual foraging (see the visual foraging project), we ask observers to collect items at a high rate. When attentional control, action planning, and execution must go hand in hand, several interesting research questions arise, for example, how adjustments of attentional control settings are reflected in action trajectories. To address such questions experimentally, we use setups that allow natural interactions and highly accurate data collection.

Related literature:

Tünnermann, J., & Schubö, A. (2024). Where you attend is where you click: Attention guides selection actions in visual foraging with conjunction objects. Journal of Vision (VSS).

Kuhn, D. A., Tünnermann, J., & Schubö, A. (2024). Task complexity and onset of visual information influence action planning in a natural foraging task. Journal of Vision (VSS).

Kuhn, D. A., Tünnermann, J., & Schubö, A. (2023). Visual Selection Interacts With Action Planning in Natural Foraging Tasks. Journal of Vision (VSS).

Tünnermann, J., & Schubö, A. (2019). Foraging with dynamic stimuli. Journal of Vision (VSS).

Motor coordination in joint action

Principal investigators: Jan Tünnermann, Anna Schubö

We are also interested in human motor coordination in sequences of actions. We examine how cognition, perception, and motor control contribute to motor coordination when an action goal has to be reached. Previous research has shown that when humans work together with a partner, they represent not only their own task but also the task of the partner. In coordinating with a partner, humans adapt movement parameters in an anticipatory way to overcome potential difficulties that may arise from the joint performance. In this project, we are interested in identifying the factors that influence the generation of shared task representations and in determining the levels of perceptual and action processing at which they interact.

Related literature:

Vlaskamp, B.N.S., & Schubö, A. (2012). Eye movements during action preparation. Experimental Brain Research, 216, 463-472. doi: 10.1007/s00221-011-2949-8

Vesper, C., Soutschek, A., & Schubö, A. (2009). Motion coordination, but not social presence, affects movement parameters in a joint pick-and-place task. Quarterly Journal of Experimental Psychology, 62, 2418-2432. doi: 10.1080/17470210902919067

Shared task representation in joint action

Principal investigators: Dominik Dötsch, Hossein Abbasi, Anna Schubö

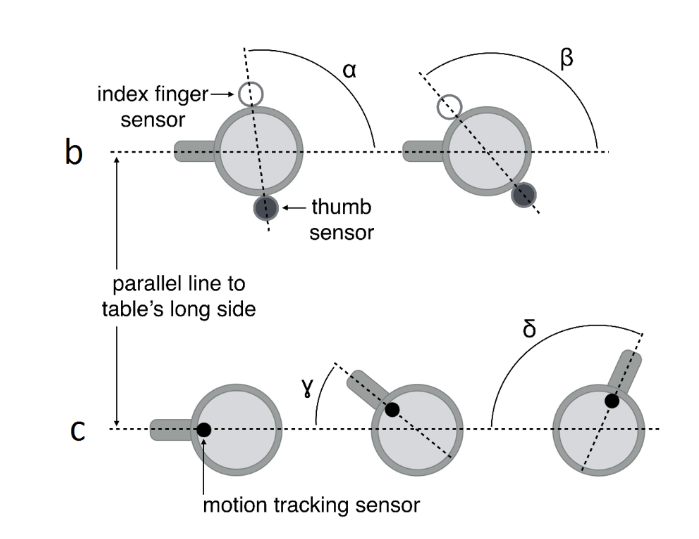

In this project, we are interested in identifying the factors that influence the generation of shared task representations in joint action, and in examining to what extent perception and action interact in joint action tasks. In our paradigms, we study human coordination in naturalistic motor sequences and examine to what extent an agent’s motor action influences the partner’s performance. In addition to behavioral measures, we track finger, hand, and arm movements as well as gaze behavior.

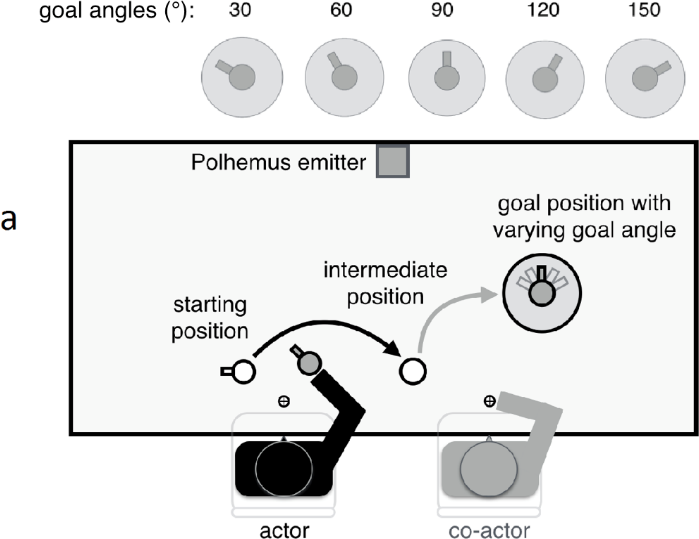

Schematic of the joint pick-and-place task and main dependent measures from Dötsch & Schubö (2015). Participants transported a wooden cup from one end of a table to the other. Hand and finger movements were recorded via motion tracking to assess the agent’s cooperative behavior. Results suggest that agents tend to represent their partner’s end-state comfort and integrate the joint action goal into their own movement planning. Social factors like group membership modulate this tendency.

Our results indicate that humans adapt movement parameters in an anticipatory way to overcome potential difficulties that might arise in reaching the joint action goal efficiently. Social factors such as group membership and gaze patterns affect cooperative behavior in joint action.

We also observe an action-induced modulation of perceptual processes across joint action partners. Agents seem to represent their partner’s action by employing the same perceptual system they use to represent their own actions.

Related literature:

Dötsch, D., Vesper, C., & Schubö, A. (2017). How You Move Is What I See: Planning an Action Biases a Partner’s Visual Search. Frontiers in Psychology, 185, 77. doi: 10.3389/fpsyg.2017.00077

Dötsch, D., & Schubö, A. (2015). Social categorization and cooperation in motor joint action: Evidence for a joint end-state comfort. Experimental Brain Research, 233, 2323-2334. doi: 10.1007/s00221-015-4301-1